Building AI products for kids

Note #3: Lessons learnt building an AI story universe for kids

This is the last of three notes on the Awdio journey:

1. The Lean-Back Problem: Interactive Audio
2. Behind the Scenes: An interactive audio app for kids
3. Building AI products for kids

First, what do kids think about AI anyway?

When we started Awdio, our thesis and core principle was that kids expect and deserve agency. Minecraft, Roblox, Toca Boca and others have shown that you can shape and bend the things on screen as you wish. These sandbox-like experiences have shifted kids’ expectations toward being in the driver’s seat.

AI makes this possible with everything. Suno your songs. Midjourney your images. Chat your stories. LLMs drive agency to heights we’ve never seen. Without knowing anything about a craft, you can now create in that craft. That is a superpower.

I’ll return later to what kids thought of what we built and the experience. First, let’s just state that having a superpower is fun, something we noticed straight away in our first rough prototypes.

From Prototype to Product

First Prototypes

Early in 2023, we began experimenting with AI-generated stories, spurred on by the launch of ChatGPT, and even more so by the launch of ChatGPT 3.5, which really changed the landscape of what was possible. Like many others, we wanted to see how AI could generate stories, how good they were, and how well they could keep track of characters, their personalities, previous storylines, and so on.

We started simple: input a name and a superpower, and boom, you’ve got an origin story about a hero. Two things were striking:

The reaction to the backstory was often, "Yeah, that was exactly how it should be." Kids were hearing what they had fantasized about: the output matched their imagination.

It was a promise of a bigger world. The origin story hinted at something grander, a whole universe of characters and plots that could spiral out from that initial seed. Kids started imagining the next story.

We realized we weren’t just creating isolated stories; we were building a world—a kind of DIY Harry Potter universe where kids could continually add more depth, more characters, and more adventures.

When we compared our testing of the interactive audio app with the simple web prototypes we built, the rough prototypes won every time. Why? Because 1) “it is mine”, 2) it sparked imagination, and 3) it still used the strength of audio’s lean-back listening experience. Even when the execution was rough, the hint of what the future could hold for the character was enough to create that early excitement.

Crossing the AI Chasm: Going from a “Wow” demo to retention

The main hurdle for novel AI entertainment products has been going from a cool demo to a product that someone uses every day or every week.

This was the challenge we discussed when looking at the prototype: how do we build on this promise of a larger world and make it something you want to come back to and keep exploring?

Many story creators today—especially in the educational or kids' space—focus on creating a single, isolated story. While there’s a lot of merit in that approach, we saw the potential for something bigger. We wanted to create a path where kids could generate a world that would lead to multiple storylines, characters, and arcs.

The chatbot UI doesn’t really work for entertainment products[1]. It works great for general-purpose products where you want to be free to explore different areas, but we wanted to take you on a journey through the world you built, little by little, so that you form a connection to its characters and places as you go deeper into it.

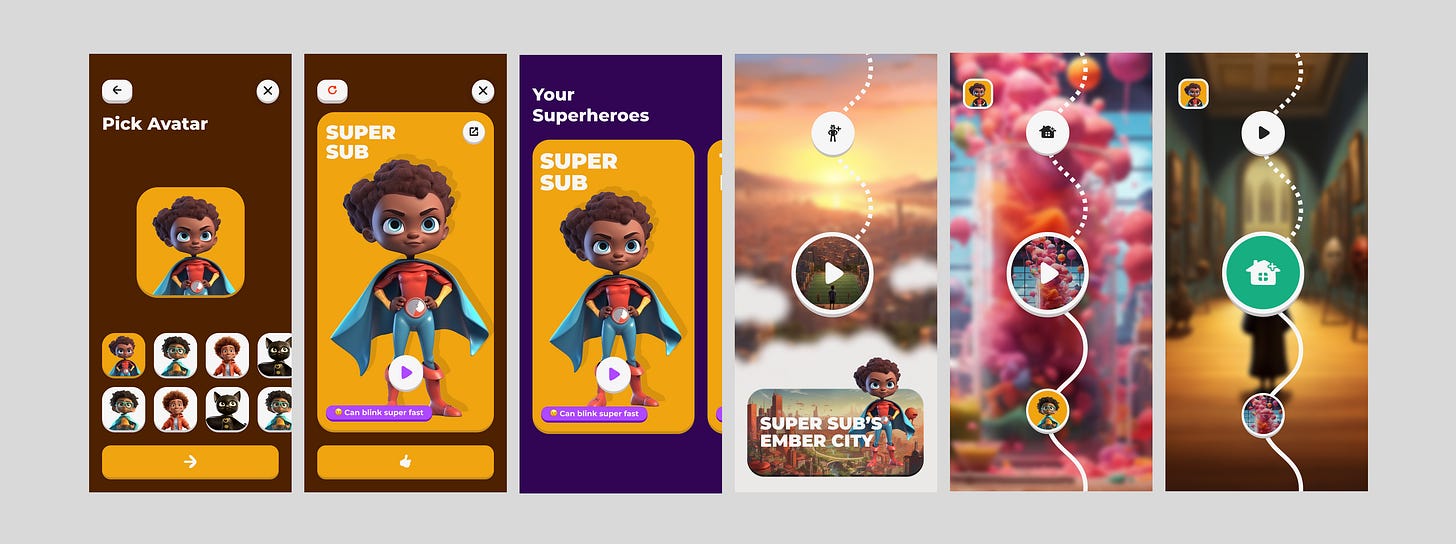

For the first version, we took inspiration from Duolingo and other path-like experiences, where you have a visual representation of your progress and how much you’ve done. Our hypothesis was that this would show that there’s a lot more content to come, and also that you need to come back again and again to get the whole story.

Then there were many questions about how the mechanics should actually work. For example: is it a daily or weekly story? Can you listen to it all at once? How often does new content come out? What does “new” look like in this context?

Superland V1: Our first attempt at crossing the AI chasm

We started building an app called Superland. Our goal was to create a consistent world that allowed kids to go deeper, and to get some evidence that kids were keen on continuing the stories. We asked Katrine to set up a very light story bible for it. Here are some short examples from it:

Where: Ember City

Ember City is a mishmash of old buildings and new, but every building has a red roof, and when the sun rises and sets, the whole city glows like embers in a dying fire. But dusk and dawn are the most dangerous times to be out, as crooks and villains roam the streets. Only in the luscious green parks are you safe. Until now.

The evil mayor

The Mayor aspires to be president of the country one day and sees his job as mayor as a stepping stone. He is obsessed with money and quick fixes and doesn’t believe in rehabilitating criminals. He has a caged bird in his office that he both loves and thinks makes too much noise.

The plot

Ember City is growing and nature is struggling. The mayor doesn’t care about nature and only wants progress. Our hero is the only one who really understands the importance of nature and wants to protect it. The hero’s school mirrors what is happening in the big city, and the generated villain fights the hero there.

We then made 20 “chapters” of this story. Each chapter had the following structure:

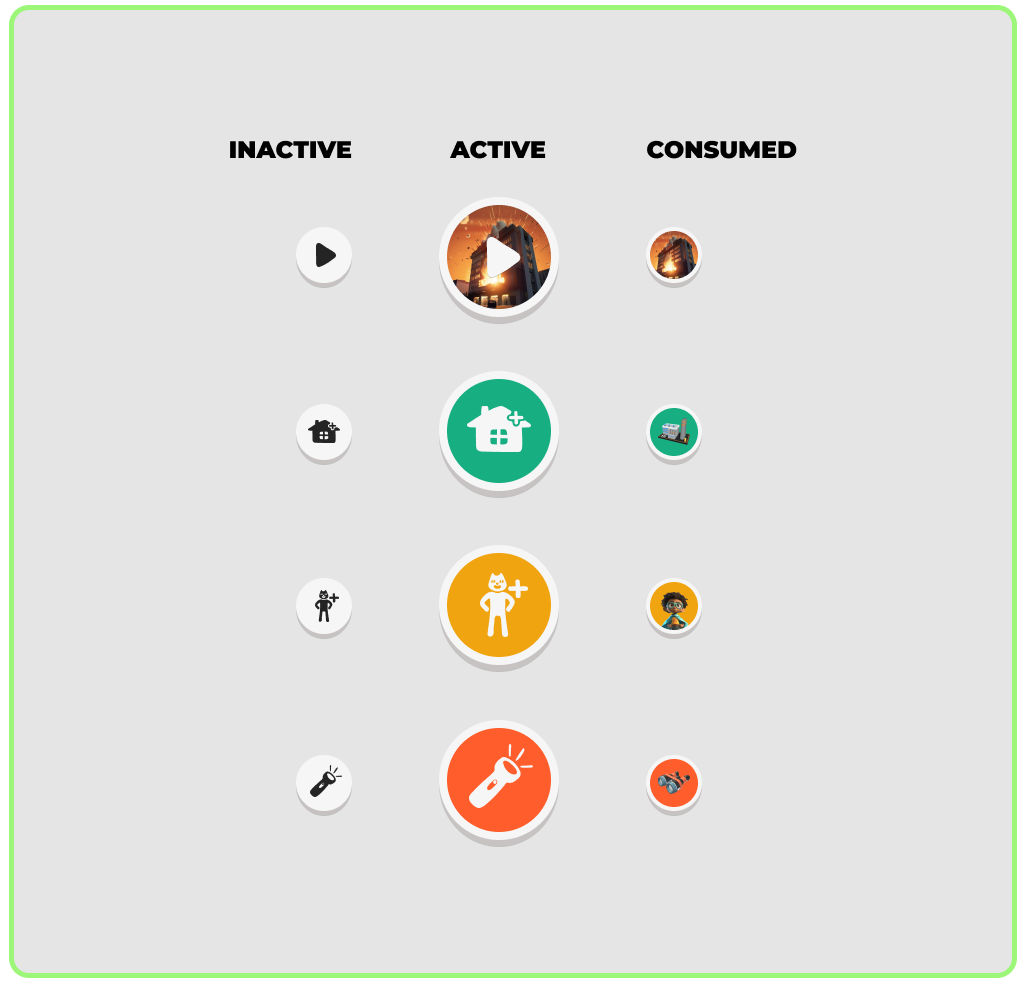

You create something that’s part of that chapter. This could be the villain, the best friend, the car, your home, etc.

What you create is integrated into the chapter, which builds on the earlier parts. So if you created a best friend, the name and traits you picked are carried into the following chapter.

Some called this an “interactive audiobook,” which is probably the cleanest description. The setup worked in some ways and not in others, but it was the clearest and easiest-to-understand way of progressing while creating that we came up with.

As expected, kids rarely made it through the entire journey. However, there was excitement in creating their hero and creating the villain. But when we got to the stories, there was a big drop-off. The kids who made it past the first chapters really loved it, and we saw very long listening sessions. The challenge was that there were too few of them.

Technical Challenges & learnings of V1

Before moving on, here are just a few of the challenges we faced in creating an AI product 18 months ago with real users, and how we solved them. Some are still there, and some have been resolved.

We relied on two main tools, and their quality, speed, and reliability largely determined the quality of the experience:

OpenAI API

ElevenLabs synthetic voices

Speed

Before ElevenLabs updated their API to allow for streaming audio, we needed to wait for the full MP3 file to be created. This meant around 60 seconds of creation time.

To buy us time, whenever you created a hero, friend, villain, or anything else before an episode, we ran two processes in parallel: 1) creating the backstory for the new element, which was short and therefore fast, and 2) as soon as the LLM had finished the backstory, sending it to the story creation engine, which then started creating the second audio file.

So when you had listened to the backstory, the full story was almost complete. This allowed us to bypass some of the waiting time, though there were still instances when creation was too slow. This changed with the launch of streaming later in 2023.
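A minimal sketch of that pipelining, assuming Python’s asyncio. The functions `generate_backstory`, `synthesize_audio`, `generate_story_audio` and `play` are hypothetical stand-ins for the LLM call, the TTS call and playback, and the sleep durations are placeholders that only mimic their relative speed. The idea is that the slow story job starts as a background task before the backstory plays, so listening time hides most of the wait.

```python
import asyncio

# Hypothetical stand-ins for the real LLM/TTS calls; delays are placeholders.
async def generate_backstory(element: str) -> str:
    await asyncio.sleep(0.01)  # short text, so fast
    return f"Backstory of {element}"

async def synthesize_audio(text: str) -> bytes:
    await asyncio.sleep(0.01)  # short clip
    return f"AUDIO[{text}]".encode()

async def generate_story_audio(backstory: str) -> bytes:
    await asyncio.sleep(0.05)  # long story + full MP3 synthesis, so slow
    return f"AUDIO[story after: {backstory}]".encode()

async def play(audio: bytes, log: list[str]) -> None:
    log.append(f"playing {audio.decode()}")
    await asyncio.sleep(0.04)  # the kid is listening to the backstory

async def create_element(element: str, log: list[str]) -> bytes:
    backstory = await generate_backstory(element)
    # Start the slow story generation right away, without waiting for it.
    story_task = asyncio.create_task(generate_story_audio(backstory))
    # Meanwhile, voice and play the short backstory.
    await play(await synthesize_audio(backstory), log)
    # Only the remaining part of the story generation is waited on here.
    story_audio = await story_task
    log.append("story ready")
    return story_audio
```

For example, `asyncio.run(create_element("villain", log))` plays the villain’s backstory first and returns the story audio once it is finished.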

Consistency

When trying to create an IP and a world, a few things are key, such as:

Characters behaving according to how their personalities were introduced

Names, places, and relationships being consistent with the history of the world

LLMs easily forget, mix up, or invent characters, especially as the story expands and the number of events grows.

Peter was the mastermind behind making this work, but he did it without using any fancy embeddings or RAG methods. We made sure to provide the right backstories and descriptions in the context window and linked them together when prompting the story.

The overall story arc was quite fixed, and each chapter had a clear plot and set of participants, which limited how much the model had to improvise and made consistency easier to maintain.
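A rough sketch of this no-RAG approach, using hypothetical names (`Entry`, `Chapter`, `build_chapter_prompt`) that are not from the original code. For each chapter, the backstories of the hero and that chapter’s fixed participants are pasted directly into the prompt, so the model always sees the canonical names and traits rather than having to remember them.

```python
from dataclasses import dataclass

@dataclass
class Entry:
    name: str
    kind: str         # "character", "place", "object", ...
    description: str  # backstory/personality, written or generated earlier

@dataclass
class Chapter:
    number: int
    plot: str
    participants: list[str]  # names of entries that appear in this chapter

def build_chapter_prompt(world: dict[str, Entry], chapter: Chapter, hero: str) -> str:
    """Hypothetical prompt builder: only the entries this chapter needs go in."""
    lines = [
        "You are writing one chapter of an ongoing audio story for kids.",
        "Keep every name, place and relationship exactly as described below.",
        "",
    ]
    # Hero first, then the chapter's participants (without repeating the hero).
    for name in [hero] + [p for p in chapter.participants if p != hero]:
        entry = world[name]
        lines.append(f"{entry.kind.capitalize()} - {entry.name}: {entry.description}")
    lines += [
        "",
        f"Chapter {chapter.number} plot: {chapter.plot}",
        "Only use the characters and places listed above.",
    ]
    return "\n".join(lines)
```

Characters that don’t take part in the chapter never enter the context window, which keeps the prompt short and gives the model fewer names to mix up.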

Varying the storyline, yet providing surprises

The downside of this method was that even if you played the story again with the same superhero, you got similar stories. The differences were in how the hero solved the problem in each story—for instance, if your superpower was the power of condiments versus shooting candy from your eyes.

In terms of flexibility and variation, we leaned toward consistency in the world, which provided fewer variations in the stories.

Safety

As this is a kids' product that opens up creative possibilities, it was important to address TTP. So what did we do to create a safe space?

We had a long list of words and names that were part of the initial content filter.

We ran an AI prompt to check if combinations of words not in the filter could be seen as harmful in any way.

These two actions created a fairly robust content filter—maybe even too robust, especially the second filter, which could sometimes go overboard on safety measures.
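The two-layer filter could look roughly like this. The blocklist contents are placeholders, and `llm_flags_harmful` is a hypothetical callable standing in for the AI check; the cheap word filter runs first, and the model is only consulted for text that passes it.

```python
import re
from typing import Callable

# Placeholder blocklist; the real one was a long, curated list of words and names.
BLOCKLIST = {"blood", "gun"}

def words(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())

def is_safe(text: str, llm_flags_harmful: Callable[[str], bool]) -> bool:
    # Layer 1: cheap, deterministic word/name filter.
    if any(w in BLOCKLIST for w in words(text)):
        return False
    # Layer 2: hypothetical model check for harmful combinations of allowed words.
    return not llm_flags_harmful(text)
```

Ordering the layers this way means the slower, less predictable model check only runs when the blocklist finds nothing, and it is also the layer most likely to be overly cautious.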

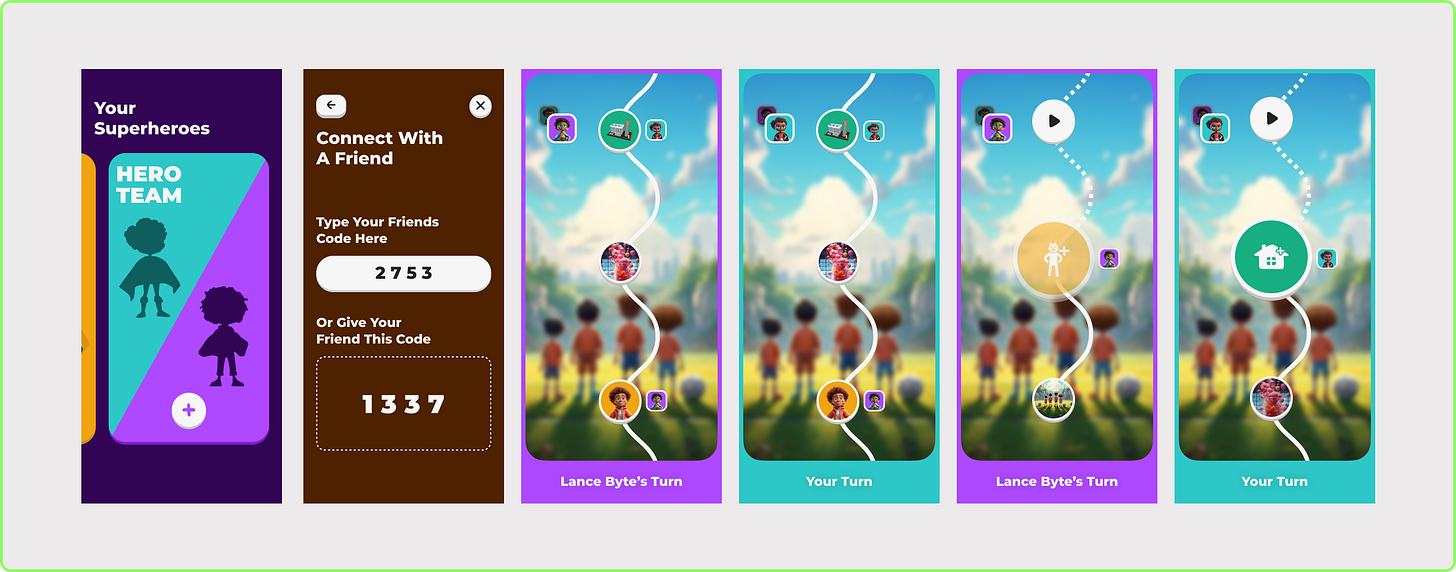

Idea we liked but never got to: Multiplayer

Doing things together was a principle we always wanted to support. This was a simple take on it: the same structure as single-player mode, plus a friend code and simple turn-taking to avoid deeper privacy challenges. We built 80% of it but, unfortunately, never shipped it.
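A hedged sketch of how a friend code plus simple turn-taking might be modelled. The `Session` class and its methods are illustrative, not the unshipped implementation: players share only a random six-character code and a display name, and only the player whose turn it is can add a creation.

```python
import secrets
import string
from dataclasses import dataclass, field

CODE_ALPHABET = string.ascii_uppercase + string.digits

def new_friend_code(length: int = 6) -> str:
    # Random code to share with a friend; no accounts or contact details needed.
    return "".join(secrets.choice(CODE_ALPHABET) for _ in range(length))

@dataclass
class Session:
    host: str
    code: str = field(default_factory=new_friend_code)
    players: list[str] = field(default_factory=list)
    turn: int = 0
    creations: list[tuple[str, str]] = field(default_factory=list)

    def __post_init__(self) -> None:
        self.players.append(self.host)

    def join(self, code: str, player: str) -> bool:
        # Joining only requires the shared code and an unused display name.
        if code != self.code or player in self.players:
            return False
        self.players.append(player)
        return True

    def current_player(self) -> str:
        return self.players[self.turn % len(self.players)]

    def submit(self, player: str, creation: str) -> bool:
        # Simple turn-taking: out-of-turn submissions are rejected.
        if player != self.current_player():
            return False
        self.creations.append((player, creation))
        self.turn += 1
        return True
```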

Kids’ challenges with V1

We launched this app in the App Store while also running multiple play tests with kids and talking to many families about the experience. The conclusion was that three areas stood out:

1. The audio experience

As described in my post about the first app we built, the sound engineering there used multiple voices, a dialogue-driven story, and a lot of background sound effects to create an engaging story.

The AI experience, by comparison, had a somewhat monotone voice, and because each story ran for several minutes rather than a short clip, the monotony was magnified.

We did add an intro and outro song to make it more entertaining, but the experience still led to comments from kids that “the voice was boring.” Even if, as a technical achievement, it sounded far better than anything we’d heard before, for a kid that doesn’t really matter.

This is, of course, what NotebookLM has been praised for so much: its two hosts go back and forth on the subject. That variation in voices is key to making it enjoyable to listen to.

2. The story experience

We had a clearly scripted sequence of events for each chapter, yet the drama and setup still weren’t as good as a human writer’s. In audio, when you don’t have images to look at, getting attached to the story, being drawn into it, and letting it build a world is everything, so even being 10% off is the difference between a successful story and a failed one.

3. Character creation was the opportunity

But there was a third point of feedback as well: kids wanted to do more with the characters. The really fun part was creating characters, places, and objects. These got absurd and fun backstories that were really exciting, so we wanted to make them the heart of it all. The backstories were shorter and required less story structure from the LLM, yet they turned bizarre inputs into structured, professional-sounding pieces. Which is kind of amazing.

We didn’t think we could really make a difference on the first two feedback points without significant breakthroughs in our partners’ models, at least not within the time frame and resources we had available.

The Pivot: Superland V2

The New Approach

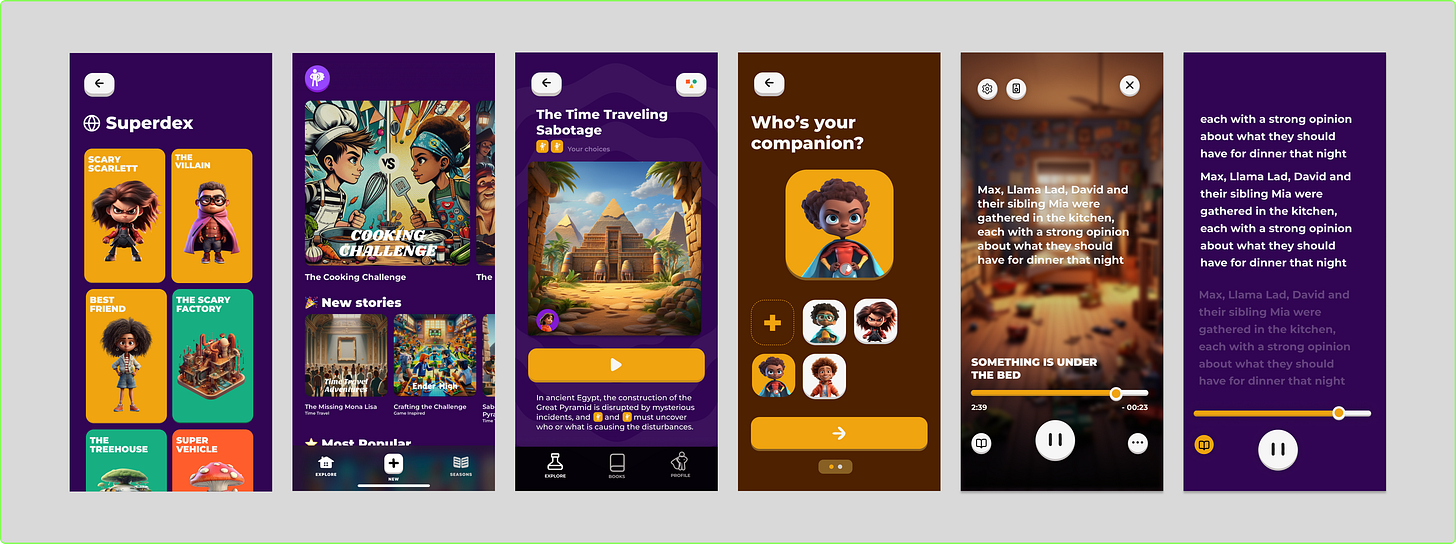

So we took a different route. Instead of following a strict path, we opened things up, allowing kids to create objects, places, and characters that they could plug into any story they wanted. This new approach let them build and reflect, creating worlds before jumping into a story. It became V2 of Superland, an app that, among other things, was App of the Day earlier this year. It gave users the freedom to craft family settings, mystical places, and unique objects, then use them to weave together narratives.

Principles and Enablers:

Create and connect: We felt that what you really connected with was the characters. You wanted to put these characters in different stories. Consistent characters, places and objects. Varying stories.

New every time: We wanted to create a “new” feeling every time you entered, that there were new stories available. Easier to create new storylines.

ElevenLabs had added streaming to their API, which meant we could create stories much faster.

GPT-4 had come out, so stories could be more complex.
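One way to picture the “consistent creations, varying stories” principle in code, assuming hypothetical `Creation` records and a made-up list of storyline seeds (none of these names come from Superland itself). The saved character, place and object stay fixed, while each new story gets a seed the kid hasn’t heard recently.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class Creation:
    kind: str       # "character", "place" or "object"
    name: str
    backstory: str  # generated once and reused in every story

# Hypothetical storyline seeds; the premise varies, the creations do not.
STORY_SEEDS = [
    "{character} loses {object} somewhere in {place}.",
    "A storm traps {character} inside {place} with only {object} to help.",
    "{character} discovers that {object} can talk, but only in {place}.",
]

def pick_seed(recent: list[str], rng: random.Random) -> str:
    # Prefer seeds not used recently; fall back to all seeds if none are left.
    fresh = [s for s in STORY_SEEDS if s not in recent] or STORY_SEEDS
    return rng.choice(fresh)

def build_story_prompt(character: Creation, place: Creation, obj: Creation,
                       recent: list[str], rng: random.Random) -> tuple[str, str]:
    seed = pick_seed(recent, rng)
    premise = seed.format(character=character.name, place=place.name, object=obj.name)
    context = "\n".join(f"{c.kind}: {c.name}. {c.backstory}" for c in (character, place, obj))
    return seed, f"{context}\n\nWrite a new story with this premise: {premise}"
```

Because the creations carry their own backstories, adding a new seed changes every kid’s next story without touching anything they made.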

This was different from traditional “choose your own adventure” formats. It allowed for more open-ended exploration, where kids could listen, reflect, and build, combining passive listening with active creation. This pattern is similar to what we see in NotebookLM and many other creative AI tools.

What Worked

Character, Place and Object Creation: Many kids created a lot of things, and this was still a very fun experience. You get to design and craft a character, place, or object and then get quite an entertaining story about it.

Increased Variation in V2: Introducing diverse storylines in V2 brought freshness and variety, keeping the experience lively and fun, and gave us the ability to “launch” new things in the product.

What We Missed

Missed Depth in Characters and Stories: The stories missed the mark on creating layers and different ways to connect with the story. Each one had to set up the world, the plot, the conflict, and the resolution in too short a time frame to succeed at any of them, which limited emotional investment and connection with the characters.

One-Off Storylines: Related to the first point, by focusing on short, one-time storylines we missed opportunities for extended, impactful narratives, which led to only light engagement.

Imbalance in Depth vs. Variation: V1 had limited variation with a single storyline, while V2 had too much variation but not enough depth, resulting in a lack of meaningful engagement.

These are many of the same mistakes I see in AI story creators right now. It’s really easy to make a story, but there is no depth to it and no deeper relationship with the characters. Hence, preschool is a natural place for these tools, while older audiences are more challenging. But it still feels like the potential lies in building something with more story and more depth.

What did I learn building these early AI products?

Models are good enough today: I very much believe that even if model development stopped now, there would be so much to build on top of what we have today, and so little has been built on them yet. We have still explored only a fraction of what they can do.

Motivational layer is everything: The Duolingo CEO has described their app as “the motivational layer” for learning a new language. That feels very relevant to these experiences. You can easily read a book or listen to an audiobook today, but how do we motivate you to keep going? The UI/application layer really is everything and defines much of the success.

Build to the model’s strengths: There are limits to how these models behave, what they’re good at, and what they’re still not so good at. Choose the strengths and build around the limitations. The models have magical powers, and enabling the magic while hiding the weaknesses can create amazing things.

These three posts close the chapter on the past two years. What’s next in this space? I’m now planning to write about areas that interest me and to use this space for research. Follow along.

[1] Character.ai is a chatbot entertainment product with both usage and retention, so you could make the case that the chatbot is the only format that works. You could also make the case that its use cases and outcomes are something we want to keep far away from kids.